GANDHINAGAR: What if we told you that many students who use ChatGPT aren’t trying to cheat their way to better grades — they’re actually learning more? Sounds almost unbelievable, right? Well, that’s exactly what a brand-new study has revealed.

Researchers at the University of British Columbia Okanagan (UBCO) found that when students were allowed to use generative AI tools like ChatGPT for assignments, they mostly used them responsibly and thoughtfully. Far from replacing their own thinking, they treated AI as a study buddy to make their work smoother, faster, and even more insightful.

Published in May 2025 in the journal Advances in Physiology Education, the paper — titled “Reflective writing assignments in the era of GenAI: student behaviour and attitudes suggest utility, not futility” — was led by Dr. Meaghan MacNutt, who teaches professional ethics in UBCO’s School of Health and Exercise Sciences, along with Tori Stranges, a doctoral student and lecturer at the same school. Their research offers a surprising twist in the heated global debate about AI in classrooms.

As Dr. MacNutt put it: “There is a lot of speculation when it comes to student use of AI. However, students in our study reported that GenAI use was motivated more by learning than by grades, and they are using GenAI tools selectively and in ways they believe are ethical and supportive of their learning.”

So let’s break down exactly what they found — and why it might change the way your teachers, parents, and even you think about AI in school.

Diving into the Study: How Students Used AI

So, how did they figure all this out?

The researchers surveyed almost 400 undergraduate students across three different courses at UBCO. These courses, which spanned from first to fourth year, all included reflective writing assignments – tasks that ask students to think deeply about course content, their experiences, and how they’re learning, to gain insights and develop their skills.

Crucially, all three courses had the exact same policy: students were allowed to use GenAI tools for their writing. The instructors didn’t push students to use AI or tell them not to; they simply guided them to consider how to use it effectively, ethically, and in ways that would genuinely enhance learning. Students anonymously completed surveys about their AI use on at least five reflective writing assignments.

Who Used AI and How?

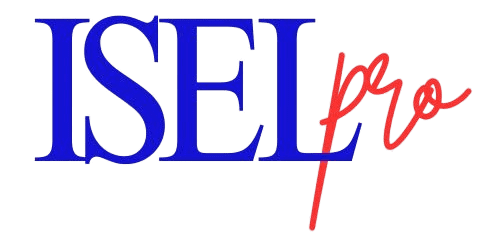

Perhaps one of the most surprising findings, especially given all the buzz around AI, is that only about one-third (33%) of the students actually used GenAI on their reflective writing assignments. That’s not everyone, or even a majority, which might go against what you’d expect to hear!

And for those who did use it, their approach was far from simply letting AI do all the work. Dr. MacNutt noted that most students used AI to kickstart their papers or to help them revise specific sections of their writing.

This means they were using AI as a tool to support their own process, not to replace it. In fact, the study found that only a tiny fraction—0.3 per cent of assignments—were mostly written by GenAI. Even more striking, none of the submissions were reported to be entirely written by GenAI.

This strongly suggests that students are being thoughtful and selective in their use, focusing on how AI can assist, rather than take over, their learning and writing process.

What Drove Students to Use AI?

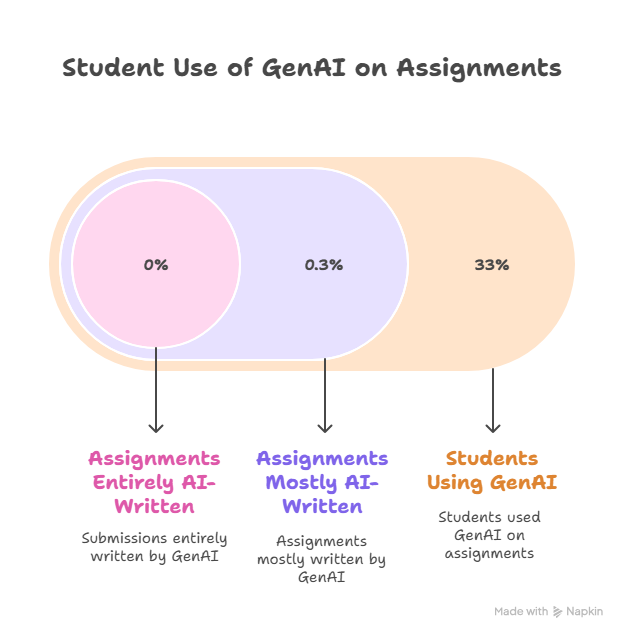

The study dug deep into why students chose to use AI. For the majority of users – a whopping 81 per cent – their GenAI use was inspired by at least one of three key factors:

- A desire to enhance learning (54% of users): Students wanted to learn more from their assignments.

- To increase the speed and ease of completing the assignment (54% of users): With demanding academic lives and mental well-being pressures, making tasks quicker and simpler was a big motivator.

- To improve their grades (38% of users): Getting better marks was a factor, but not the primary one for most.

About 15 per cent of students were motivated by all three factors.

Interestingly, while grades were a factor, students were significantly less likely to report being motivated by a desire to earn higher grades compared to a desire to learn or to make assignments easier and faster.

This finding challenges the common perception that today’s undergraduate students are overly focused on grades at the expense of genuine learning.

As Dr. MacNutt explained, “This was somewhat unexpected due to the common perception that undergraduate students have become increasingly focused on grades at the expense of learning.”

This insight is crucial because it suggests that students are not just looking for an easy way out; they’re actively seeking ways to engage with their education more effectively.

Perceived Benefits and Harms

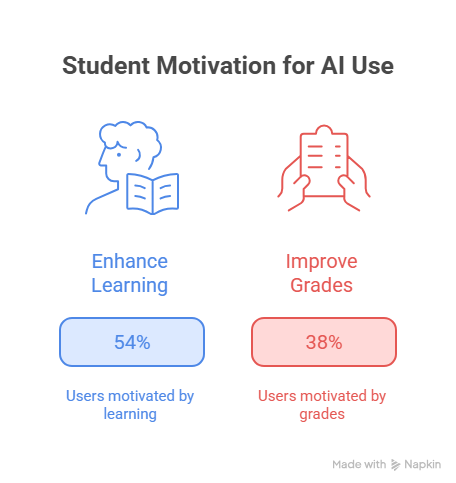

Students who used GenAI also reported various benefits. Eighty-six per cent of users found that AI helped them in at least one of the following ways:

- Helped them learn more (56% of users).

- Helped them complete assignments more quickly/easily (58% of users).

- Helped them get higher grades (33% of users).

However, the study also acknowledged that not all experiences were positive, with 10% of users reporting that they would have learned more if they hadn’t used GenAI. This “tremendous variability” in reported motivations and perceived benefits highlights that students are not all the same; they approach and benefit from AI in different ways.

Ethical Use and Future Perspectives

One of the most reassuring findings was around students’ ethical perceptions. Most GenAI users (83%) believed their use of the technology was ethical.

This “moral comfort” suggests students see AI as a legitimate aid rather than a tool for dishonesty. Very few, only 4%, expressed regret over using GenAI on their assignments.

This is a strong indicator that students are attempting to integrate AI thoughtfully into their academic work, aligning with their personal ethics and learning goals.

Interestingly, the study also asked students if they wished they had used GenAI more. Nineteen per cent of users and 38 per cent of non-users said they did.

This highlights a diverse range of attitudes, showing that while many are comfortable with their current usage, others—including those who didn’t use AI at all—might be open to exploring it more in the future. This variability underscores the need for ongoing conversations and education about AI’s role in academic settings.

Addressing Concerns and Future Directions

While the findings offer an optimistic view, Dr. MacNutt also raised important cautions. She pointed out that GenAI could be a valuable tool for specific student populations, such as those learning English or individuals with reading and writing disabilities. However, she also warned about the potential for new inequities: “if paid versions are better, students who can afford to use a more effective platform might have an advantage over others, creating further classroom inequities.”

This is a critical consideration for schools, as it highlights how technology, if not carefully managed, could inadvertently widen existing gaps between students.

These self-reported findings run counter to common concerns about widespread student misuse and overuse of GenAI at the expense of academic integrity and learning. This is significant because it suggests that rather than fearing AI, educators might need to embrace it more fully.

Dr. MacNutt suggests that as AI becomes more prevalent, institutions and educators should shift their approach. Instead of “surveillance of” students, she advocates “collaboration with” them.

This means fostering an environment where students are taught how to use AI tools effectively and ethically, rather than simply being monitored for misuse.

She further emphasizes the need for continued research in this area, especially as GenAI technologies evolve. It’s crucial to understand the diverse backgrounds students come from and how they might benefit or be harmed by these technologies.

“But as we move forward with our policies, or how we’re teaching students how to use it, we have to keep in mind that students are coming from really different places. And they have different ways of benefiting or being harmed by these technologies,” she stressed. Understanding this “mosaic” of student experiences, motivations, and perceptions is key to developing inclusive and equitable strategies for AI integration in education.

What This Means for You and Your School

This study offers a powerful message: AI in education doesn’t have to be a battle between students and teachers. Instead, it can be a partnership.

For you, it means that using AI tools to help you learn, organize your thoughts, or make your writing clearer could become a more accepted and encouraged part of your academic life.

Imagine using AI to brainstorm ideas for an essay, to get feedback on your draft, or even to help you understand complex concepts by asking it questions.

For your teachers and schools, it means rethinking policies and embracing new ways of teaching. It’s about creating an environment where the incredible promise of AI to enhance learning is realized, while also addressing the challenges of equity and ethical use head-on.

By understanding your behaviors and attitudes, educators can better design assignments and support systems that maximize AI’s benefits and minimize any potential downsides.

Ultimately, the UBCO study offers a hopeful vision: one where AI is not just “here to stay” but is actively embraced by students as a tool for deeper learning, efficiency, and ethical academic growth. It’s a call for collaboration, understanding, and a forward-thinking approach to technology in education, ensuring that you, the students, are at the heart of how these powerful tools shape your future learning experiences.